Run a Stable LizardFS


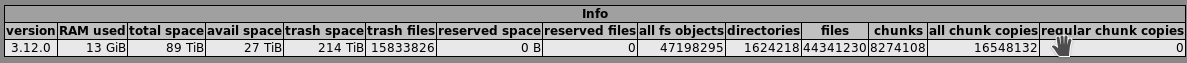

LizardFS is very simple to set up. It can handle terabytes of data, but it has some pitfalls you should avoid. My system currently holds 14TB of real data (65TB including duplicates and snapshots) in 26,732,025 chunks on 4 chunk servers that provide 80TB of disk space. My master server uses 80GB of the 134GB RAM currently installed (by the fraudulent manufacturers' arithmetic, 16GB + 4×32GB = 134GB: they sold me 160GB, but I got 134GB).

For basic installation instructions, see my previous blog post.

Basic Tips

- master server:
  - install two master servers: one production master and one shadow master
  - masters use only a single CPU thread, so more cores don't help
  - masters require a huge amount of RAM, so get servers with many free memory slots
  - watch the memory usage on your masters and add more RAM before it runs out
  - a master server that runs out of RAM hangs the whole host
  - if you don't have enough RAM, you may try BerkeleyDB once its bugs (open as of Jan 2019) have been fixed

- chunk server:
  - chunk servers don't need much RAM; mine use ~3GB
  - CPU usage on chunk servers is low
  - there must always be enough free disk space on all chunk servers
  - if any chunk server runs out of disk space, you lose data

- metalogger server:
  - run some metalogger servers; they may prevent data loss when the master server crashes
  - metalogger servers don't need many resources

- CGI server:
  - a CGI server gives you a good overview of your resources
  - CGI servers don't need many resources

- backup:
  - add a shadow master server
  - at the very least, back up /var/lib/lizardfs (excluding chunk); on a second server, add this to a daily cron job:
    /usr/bin/rsync -aq --exclude=chunk universum:/var/lib/lizardfs/ /var/backup/mfsmaster/
  - add metalogger servers
  - distribute chunks on 2 or more chunk servers
  - take daily, weekly and monthly (but not hourly) snapshots of your data
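The chunk server tips above stress that a chunk server running out of disk space loses data. A minimal sketch of a disk-usage check, assuming POSIX `df -P` output for the chunk server's data disks is piped in; the function name, the mount points and the 90% limit are hypothetical examples, not part of LizardFS:

```shell
#!/bin/sh
# Sketch: warn when a chunk server data disk is getting full.
# Assumption: POSIX "df -P" output for the chunk disks is piped in;
# the function name, mount points and limit are hypothetical.
# Usage: df -P /mnt/hdd1 /mnt/hdd2 | check_chunk_disks 90
check_chunk_disks() {
  awk -v limit="$1" '
    NR > 1 {                          # skip the df header line
      pct = $5
      sub(/%$/, "", pct)              # "95%" -> "95"
      if (pct + 0 >= limit + 0) {
        print "WARNING: " $6 " is " pct "% full"
        full = 1
      }
    }
    END { exit (full ? 1 : 0) }       # non-zero status if any disk is over the limit
  '
}
```

Running it from cron on each chunk server gives you a warning, and a non-zero exit status, well before a disk is completely full.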

Performance

After filling up my chunks with terabytes of data, the storage became very slow. I opened a ticket and discussed the problem with the developers. Based on that discussion, I recommend the following configuration options:

On the master servers, set in /etc/lizardfs/mfsmaster.cfg:

LOAD_FACTOR_PENALTY = 0.5
ENDANGERED_CHUNKS_PRIORITY = 0.6
REJECT_OLD_CLIENTS = 1
CHUNKS_WRITE_REP_LIMIT = 20
CHUNKS_READ_REP_LIMIT = 100

On the chunk servers, set in /etc/lizardfs/mfschunkserver.cfg:

HDD_TEST_FREQ = 3600
#CSSERV_TIMEOUT = 20
#REPLICATION_BANDWIDTH_LIMIT_KBPS = 1000
ENABLE_LOAD_FACTOR = 1
NR_OF_NETWORK_WORKERS = 10
NR_OF_HDD_WORKERS_PER_NETWORK_WORKER = 4
PERFORM_FSYNC = 0

As mount options, e.g. in /etc/fstab, use:

mfsmount /srv fuse rw,mfsmaster=universum,mfsdelayedinit,nosuid,nodev,noatime,big_writes,mfschunkserverwriteto=40000,mfsioretries=120,mfschunkserverconnectreadto=20000,mfschunkserverwavereadto=5000,mfschunkservertotalreadto=20000 0 0

Master Memory

I had a lot of trouble and crashes because the master server ran out of memory. There are many reasons why this can happen, e.g.:

- your filesystem grows

- your snapshots grow

- removing files (e.g. old snapshots) fills up the trash

Therefore, the main rule is: give the master servers enough RAM, and upgrade the RAM before it runs out.
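Watching the master's memory can be automated. A minimal sketch, assuming a Linux master host where the master process is named `mfsmaster`, procps `ps` is installed and `/proc/meminfo` is available; the function names and the 80% threshold are hypothetical choices, not part of LizardFS:

```shell
#!/bin/sh
# Sketch: warn before the LizardFS master runs out of RAM.
# Assumptions (not from the post): Linux host, master process named
# "mfsmaster", procps "ps"; function names and threshold are hypothetical.

# Sum the resident set size (kB) of all mfsmaster processes; 0 if none.
master_rss_kb() {
  ps -C mfsmaster -o rss= | awk '{ sum += $1 } END { print sum + 0 }'
}

# Physical RAM of this host in kB, as reported by the kernel.
mem_total_kb() {
  awk '/^MemTotal:/ { print $2 }' /proc/meminfo
}

# check_master_mem RSS_KB TOTAL_KB THRESHOLD_PERCENT
# Prints the usage; returns 1 when it reaches the threshold.
check_master_mem() {
  pct=$(( $1 * 100 / $2 ))   # integer percentage, rounded down
  if [ "$pct" -ge "$3" ]; then
    echo "WARNING: mfsmaster uses ${pct}% of RAM, add memory"
    return 1
  fi
  echo "OK: mfsmaster uses ${pct}% of RAM"
}
```

A cron job could then run `check_master_mem "$(master_rss_kb)" "$(mem_total_kb)" 80`. Keeping the arithmetic in a separate function makes the threshold logic easy to test without a running master.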

Use BerkeleyDB

There is another option: you can use BerkeleyDB to store file and directory names. This saves RAM but slows the master server down a bit. You may also increase the database cache from 10MB to, e.g., 1GB (1024MB). To do so, add to /etc/lizardfs/mfsmaster.cfg (the second line is optional; test your system with and without it):

USE_BDB_FOR_NAME_STORAGE = 1
#BDB_NAME_STORAGE_CACHE_SIZE = 1024

My experience: this option does not change anything, and currently it seems to be broken according to the reports here and here. On a second try, it seemed to use less memory but was extremely slow with the default cache; increasing the cache to 2048 worked better, but it crashed after half a day. So: do not use BerkeleyDB! (status as of January 1st, 2019)

Trash Exhaustion

If you remove a lot of files, your trash may grow faster than it is cleaned up. I first ran into this when I enabled hourly backups, keeping 24 snapshots and deleting the oldest one every hour. One day before the server crashed, my trash space was 214TB.
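A rough way to see why the trash grows so large (a back-of-the-envelope model, not a measurement from this cluster): deleted files stay in the trash for the trash time $t_{\text{trash}}$, so if files are deleted at an average logical rate $r$, the trash holds at least about

$$
S_{\text{trash}} \approx r \cdot t_{\text{trash}}
$$

With the hourly scheme described above, one snapshot of logical size $S_{\text{snap}}$ is deleted per hour, so a trash time of 24 hours (an assumed example value) gives

$$
S_{\text{trash}} \approx \frac{S_{\text{snap}}}{1\,\text{h}} \cdot 24\,\text{h} = 24\,S_{\text{snap}}
$$

and more if the master's cleanup falls behind. Setting $t_{\text{trash}} = 0$ makes this term vanish.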

One possible solution is to set the trash time to zero, so that nothing remains in the trash and files are removed immediately. For example, when the file system is mounted at /srv:

sudo lizardfs settrashtime -r -l 0 /srv

Tony Travis on 24 June 2019 at 12:11

Hi, Marc.

I used your recommendations to 'tune' a LizardFS storage cluster I built for the non-profit Mario Negri Pharmaceutical Research Institute in Milan (https://www.marionegri.it). Although your suggestions improved our cluster performance quite a lot, we discovered that they imposed too much stress on the SATA2 controllers/backplanes and the 1Gb network I used to build the cluster, causing multiple 'chunkserver' failures. In addition, I found it necessary to enable AVOID_SAME_IP_CHUNKSERVERS because of the large number of disk failures when more than one chunk required for a given block was stored on the same host. It seems that a 'chunkserver' in LFS is not a single host with a unique IP, but a combination of a host IP and a disk resource on it. Therefore, multiple 'chunkservers' can, in fact, run on the same host. This seems a very dangerous default policy to me: our chunkserver hosts have 8×8TB JBODs (i.e. LFS 'chunkservers') formatted as ext4 filesystems, and multiple disk failures on the same host resulted in missing chunks whenever (by random selection) chunks from the same block were stored on the same host.

Thanks very much for sharing your experiences with LizardFS!

Tony.

Marc Wäckerlin on 13 July 2019 at 09:59

Thank you for the hint, I'll try this. LizardFS has now been running fast and stable for months, but this week I had two failures (lost chunks shown after the daily snapshot), both of which were completely resolved by restarting the master.

I suspect a chunk server that was at more than 90% disk usage, so I have now added an extra 10TB disk. But perhaps your tip helps as well.

selvam on 12 May 2020 at 05:50

What about these parameters?

CHUNKS_LOOP_MAX_CPS

CHUNKS_LOOP_MIN_TIME

CHUNKS_LOOP_PERIOD

CHUNKS_LOOP_MAX_CPU

Marc Wäckerlin on 5 December 2022 at 10:31

I only set the values mentioned above explicitly, so the defaults for those seem to work fine for me. I try to change defaults as little as possible.